The purpose of this post is to demonstrate how to include the BSP library in a project and program the e-paper on an STM32L053-Discovery board. This method of including the BSP library is a modified version of the method described in the reference below.

References:

- https://community.st.com/t5/stm32cubemx-mcu/how-to-add-bsp-library-cubemxide/m-p/366692#M19860

- UM1775 – User manual – Discovery kit with STM32L053C8 MCU

- AN4500 – How to display size-optimized pictures on a 4-grey level E-Paper from STM32 embedded memory

- Specification for 2.1″EPD, Model No. : GDE021A1, Smart Prototyping (smart-prototyping.com)

Versions Used:

- STM32CubeIDE version 1.13.0

- STM32Cube MCU Package for STM32L0 series version 1.12.0

In order to write to the e-paper included with the STM32L053-Discovery board, we need to connect the e-paper and microcontroller with an SPI for transmitting commands and data and a few GPIO lines for control. The needed connections are shown in the illustration below from application note AN4500 published by STMicroelectronics.

But we are lucky because all of this plus much more is taken care of by the BSP library distributed by STMicro.

Steps

- Get BSP Library

- Create Project with STM32CubeIDE

- Configure Project

- Add BSP Library to Project

- Fix and Compile

- Create Bitmap

- Add Code to Upload Image to e-paper

Get BSP Library

The BSP library is included in STM32Cube MCU Package for STM32L0 series.

Download it from STMicro:

https://www.st.com/en/embedded-software/stm32cubel0.html

Un-zip the file. The BSP library for the STM32L053-Discovery board is in this folder:

..\en.stm32cubel0_v1-12-0\STM32Cube_FW_L0_V1.12.0\Drivers\BSP\STM32L0538-Discovery

It contains the following files:

There are some other files needed, but they are also included with the library.

Create New Project with STM32CubeIDE

Start STM32CubeIDE.

Create a new project by selecting File -> New -> STM32 Project from the menu:

This will open the STM32 Project – Target Selection window:

Select the MCU/MPU Selector tab (this is the default).

Find your processor using the filters, by paging down in the MCUs/MPUs List panel, or by typing the part number in the Commercial Part Number text box. You only need to type a distinguishing portion of the part number (L053 in this case, see below).

Select the specific part number in the MCUs/MPUs List panel:

Select Next.

A further STM32 Project window will open:

Enter a project name and leave everything else as shown above.

Select Finish.

Once the project has been created, the project should open to the Device Configuration Tool:

If the Device Configuration Tool does not open, right-click (RMB) on the E-Paper_BSP.ioc file in the Project Explorer and select Open from the context menu:

Configure Project

Steps

- Enable serial wire debug (optional)

- Enable SPI1

Enable Serial Wire Debug

Under Categories, select System Core -> SYS:

In the SYS Mode and Configuration panel, select Debug Serial Wire.

Leave everything else as default.

In the Pinout view panel, pins PA13 and PA14 will now be green and assigned for serial debug.

Enable SPI1

While still in the Device Configuration Tool, under Categories, select Connectivity -> SPI1:

Under SPI1 Mode and Configuration, change Mode to anything other than Disable:

Note that pins PA5 and PA7 have now been assigned to the SPI1 interface (other modes may assign additional pins).

Do not make any other changes to the SPI1 configuration. The BSP initialization function, BSP_EPD_Init(), will configure SPI1 for communication with the e-paper.

The reason we enabled SPI1 is:

- to ensure that stm32l0xx_hal_spi.h and stm32l0xx_hal_spi.c are included in folder Drivers -> STM32L0xx_HAL_Driver. These are needed by the BSP library, but are not included in the Drivers folder unless one of the SPI communication ports is enabled.

- so that HAL_SPI_MODULE_ENABLED will be defined in stm32l0xx_hal_conf.h and file stm32l0xx_hal_spi.h will be included in the correct place in that file.

Snippets from stm32l0xx_hal_conf.h with SPI1 enabled:
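For readers without the screenshots, the relevant lines look roughly like the fragment below. This is a sketch from memory of the generated header, not a verbatim copy, so the exact comments and spacing in your file may differ.

```c
/* Module selection section: uncommented by the code generator
 * once an SPI peripheral is enabled in the .ioc file. */
#define HAL_SPI_MODULE_ENABLED

/* Further down, in the include section of the same file: */
#ifdef HAL_SPI_MODULE_ENABLED
  #include "stm32l0xx_hal_spi.h"
#endif /* HAL_SPI_MODULE_ENABLED */
```

With SPI disabled, the define stays commented out, the header is never pulled in, and the BSP sources that use the SPI HAL types fail to compile.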

Of course, this is a ridiculous way to do it, but otherwise you will need to fight STM32CubeIDE to do it manually.

No other configuration of SPI1 is needed since this will be done within the BSP. Note that you could just as well enable SPI2, because that achieves the same result.

BSP initialization will also configure GPIO pins for e-paper communication and control.

Save the project (ctrl-s). This will save the project and also auto-generate code.

Add BSP Library to Project

Using Windows File Explorer, find the Drivers folder in the package downloaded above:

Using another instance of Windows File Explorer, find the STM32CubeIDE workspace and open the Drivers folder inside the project folder:

Copy the BSP folder from the downloaded package and paste it in the project Drivers folder:

Open up the BSP folder:

Delete the un-needed folders:

From within STM32CubeIDE, RMB on project name and select Refresh from the context menu:

The new folders should now be visible in the Project Explorer:

RMB on project name and select Properties from the context menu. This will open the project properties window:

Select C/C++ General -> Paths and Symbols from the tree:

Select the Includes tab and then select Add to add a new directory. An Add directory path window will open:

From the Add directory path window, select Add to all configurations and then select Workspace…

This will open a Folder selection window showing all of the projects in the workspace (only one, in this case):

Expand the tree to reveal the project folder structure:

Select the BSP folder from the tree and then select OK to confirm the selection and return to the Add directory path window:

The Directory text box will now be populated with the path to the selected folder.

Select OK to return to the Properties window:

In the same way, add the BSP/STM32L0538-Discovery path:

Select Apply and Close. The following dialog will open (unless you already selected the Remember my decision option previously):

Select Rebuild Index to rebuild the index and return to the Device Configuration Tool window:

Save the project (ctrl-s).

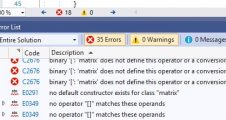

Fix and Compile

As configured, the project will not compile without error.

The problem is that there are multiple definitions of the fonts used in the BSP library (used to write text to the e-paper).

The easiest way to get around this issue is to comment out some include directives for font .c files in the stm32l0538_discovery_epd.h file. Like this:

Now the project will compile.

Create Bitmap

I created a bitmap suitable for the e-paper using GIMP.

Find an image that has a resolution the same as or higher than the e-paper (72 x 172 pixels). Any format that GIMP can open will do. I just saved a screenshot as a .png file.

Open the file in GIMP:

(optional) Save the image in GIMP’s native image format (.xcf). To do this, select File -> Save (or ctrl-s):

Select Save to save the file.

Rotate the image by selecting Image -> Transform -> Rotate 90° clockwise:

The image after rotation:

Scale the image to match the e-paper resolution.

From the menu, select Image -> Scale Image – this will open the Scale Image window:

Change the Image Size units to px for pixels (was the default for me). The aspect ratio of the e-paper (172 px/72 px = 2.3889) and the image (677 px/412 px = 1.6432) do not match. Unless you are willing to distort the image, some part of it will be lost.
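The choice of which dimension to fix when scaling can also be worked out ahead of time. The sketch below is only an illustration of the arithmetic: it assumes the rotated image is 412 px wide by 677 px tall (which is how the ratio above reads), and the names Size, scale_to_cover and centered_crop_x are made up for this example.

```c
#include <assert.h>

typedef struct {
    int width;
    int height;
} Size;

/* Integer ceiling division for positive operands. */
static int ceil_div(int a, int b) {
    return (a + b - 1) / b;
}

/* Scale src, keeping its aspect ratio, to the smallest size that still
 * covers dst in both dimensions. The excess is what gets cropped away. */
static Size scale_to_cover(Size src, Size dst) {
    assert(src.width > 0 && src.height > 0 && dst.width > 0 && dst.height > 0);
    Size out;
    /* Compare dst.width/src.width with dst.height/src.height
     * by cross-multiplying, which avoids floating point. */
    if (dst.width * src.height >= dst.height * src.width) {
        /* Width needs the larger scale factor: fix width, derive height. */
        out.width = dst.width;
        out.height = ceil_div(src.height * dst.width, src.width);
    } else {
        /* Height needs the larger scale factor: fix height, derive width. */
        out.height = dst.height;
        out.width = ceil_div(src.width * dst.height, src.height);
    }
    return out;
}

/* X offset that centers a crop of crop_width inside scaled_width. */
static int centered_crop_x(int scaled_width, int crop_width) {
    assert(scaled_width >= crop_width);
    return (scaled_width - crop_width) / 2;
}
```

For a 412 x 677 source and a 72 x 172 target, this fixes the height at 172 px and yields a width of about 105 px, so roughly 33 px of width are left to crop. The same numbers give a centered crop offset of 16 px.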

With the aspect ratio locked (the chain-link between the Width and Height should appear as shown above), try changing the width to 72 pixels. Press the Tab key or select the Height text box with the mouse to update the calculated Height pixel count:

The calculated Height pixel count is now less than 172 pixels. No good. We need 72 x 172.

Try changing the height to 172:

Okay, that works. We can just crop the image to 72 x 172. That’s next. For now, select Scale to scale the image and return to the main window:

Zoom in by holding ctrl and rotating the mouse wheel (pan by pressing the mouse wheel):

Select the crop tool (indicated by red rectangle below) or press shift-c:

With the crop tool selected, create a rough crop selection by clicking and dragging the mouse. The crop does not have to be accurate at this point:

Be sure that the position and size units are pixels (px), and then change the crop tool parameters to position (0, 0) and size (72, 172) as shown below:

The area of the image that will be cropped is shown as grayed:

To center the crop (if you wish to do so), increase the x-value of Position:

Double-click inside the rectangle to complete the crop:

The image resolution is now 72 pixels by 172 pixels. To save a copy in .xbm-format, select File -> Export from the menu:

You can change the name of the file by entering a new name in the Name text box (as was done above).

Expand Select File Type (By Extension) and scroll down to find the .xbm format:

Select the X BitMap image (xbm,icon,bitmap) format and then select Export. An options window will open:

Leave all options as default and select Export. The file should now be saved.

You can open the .xbm file with GIMP:

Obviously not a great choice of image, but good enough to demonstrate the method. And all that effort to carefully scale and crop the image without distortion!

This is what the file looks like in Notepad:

Add Code to Upload Image to e-paper

We need to add to the project the contents of the .xbm file created above, along with other code to initialize the e-paper and upload the image.

In STM32CubeIDE, open the project’s main.c file. Scroll down to the user-code, private variable section:

Copy and paste the contents of the .xbm file between the BEGIN and END comments:

I renamed the image variable. You can delete the width and height definitions, if you wish, as they will not be used.
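For orientation, an .xbm file is plain C source: two #define lines for the width and height, followed by a static unsigned char array holding one bit per pixel. Each row is padded to a whole number of bytes, and bit 0 of each byte is the leftmost pixel. The sketch below uses a tiny made-up 10 x 2 bitmap (not the image from this post) and hypothetical helper names to show the layout and the resulting array size.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical 10 x 2 pixel bitmap in XBM layout. */
#define demo_width 10
#define demo_height 2
static const unsigned char demo_bits[] = {
    0x01, 0x02,   /* row 0: pixels 0 and 9 are set */
    0xff, 0x00    /* row 1: pixels 0..7 are set    */
};

/* Each row occupies a whole number of bytes. */
static size_t xbm_bytes_per_row(unsigned width) {
    return (width + 7u) / 8u;
}

/* Total size of the pixel array in bytes. */
static size_t xbm_size_bytes(unsigned width, unsigned height) {
    return xbm_bytes_per_row(width) * height;
}

/* Returns 1 if pixel (x, y) is set, 0 otherwise.
 * Bit 0 of each byte is the leftmost pixel of that byte. */
static int xbm_get_pixel(const unsigned char *bits, unsigned width,
                         unsigned x, unsigned y) {
    assert(x < width);
    size_t index = (size_t)y * xbm_bytes_per_row(width) + x / 8u;
    return (bits[index] >> (x % 8u)) & 1;
}
```

For the 72 x 172 image used here, that works out to 9 bytes per row and 1548 bytes in total, which is a quick sanity check on the array you paste into main.c.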

Open the main.h file and scroll down to the user-includes section:

Add #include "stm32l0538_discovery_epd.h" between the BEGIN and END comments, as shown above (use plain straight quotes, not typographic ones). This will add declarations for the functions needed to initialize the e-paper and to upload the image.

In the main.c file, scroll down to the user-code, section 2 in the main function:

Add BSP_EPD_Init(); between the BEGIN and END comments, as shown above. This function will initialize the e-paper.

Now add this code:

BSP_EPD_Clear(EPD_COLOR_WHITE);

BSP_EPD_DrawImage(1, 1, 72, 172, image);

BSP_EPD_RefreshDisplay();

between the USER CODE BEGIN WHILE comment and the while statement:
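Put together, the additions sit in the generated main() roughly as sketched below. To keep the example buildable on a PC, the BSP functions are replaced by stand-in stubs that only record the call order. The stubs, the EPD_COLOR_WHITE value, the call_log buffer, and the main_body_sketch name are all assumptions made for this illustration. In the real project the declarations come from stm32l0538_discovery_epd.h and the calls go directly into main().

```c
#include <stdint.h>
#include <string.h>

/* ---- Host-side stand-ins (assumptions, for illustration only) ----
 * On the board these come from stm32l0538_discovery_epd.h; the stubs
 * below only record the call order so the sketch builds on a PC. */
#define EPD_COLOR_WHITE 0xFF   /* placeholder value */
static char call_log[64];

static void log_call(const char *name) {
    strcat(call_log, name);
    strcat(call_log, ";");
}
static uint8_t BSP_EPD_Init(void) { log_call("init"); return 0; }
static void BSP_EPD_Clear(uint16_t color) { (void)color; log_call("clear"); }
static void BSP_EPD_DrawImage(uint16_t x, uint16_t y, uint16_t w, uint16_t h,
                              uint8_t *data) {
    (void)x; (void)y; (void)w; (void)h; (void)data;
    log_call("draw");
}
static void BSP_EPD_RefreshDisplay(void) { log_call("refresh"); }

/* Placeholder for the array pasted from the .xbm file. */
static uint8_t image[9 * 172];

/* Stands in for the relevant part of the generated main(). */
static void main_body_sketch(void) {
    /* USER CODE BEGIN 2 */
    BSP_EPD_Init();                           /* set up SPI1, GPIO and the panel */
    /* USER CODE END 2 */

    /* USER CODE BEGIN WHILE */
    BSP_EPD_Clear(EPD_COLOR_WHITE);           /* blank the frame buffer */
    BSP_EPD_DrawImage(1, 1, 72, 172, image);  /* load the bitmap */
    BSP_EPD_RefreshDisplay();                 /* push it to the panel */
    /* the generated while (1) loop follows here */
}
```

Because the three drawing calls sit before the while statement, the image is uploaded once at start-up rather than on every pass through the loop.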

Now build the project.

Once the build is complete, run the project. The first time you run the project, a launch configuration window will open:

Keep the default settings and select OK.

Once the program has downloaded to the board, the screen should flash (that’s the BSP_EPD_Clear() function), and the hideous image should load:

But I prefer this image: